publications

2025

- Leveraging Multispectral Sensors for Color Correction in Mobile Cameras. Luca Cogo, Marco Buzzelli, Simone Bianco, Javier Vazquez-Corral, and Raimondo Schettini. arXiv preprint arXiv:2512.08441, 2025.

Recent advances in snapshot multispectral (MS) imaging have enabled compact, low-cost spectral sensors for consumer and mobile devices. By capturing richer spectral information than conventional RGB sensors, these systems can enhance key imaging tasks, including color correction. However, most existing methods treat the color correction pipeline as a sequence of separate stages, often discarding MS data early in the process. We propose a unified, learning-based framework that (i) performs end-to-end color correction and (ii) jointly leverages data from a high-resolution RGB sensor and an auxiliary low-resolution MS sensor. Our approach integrates the full pipeline within a single model, producing coherent and color-accurate outputs. We demonstrate the flexibility and generality of our framework by refactoring two different state-of-the-art image-to-image architectures. To support training and evaluation, we construct a dedicated dataset by aggregating and repurposing publicly available spectral datasets and rendering them under multiple RGB camera sensitivities. Extensive experiments show that our approach improves color accuracy and stability, reducing error by up to 50% compared to RGB-only and MS-driven baselines. Datasets, code, and models will be made available upon acceptance.
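Rendering spectral data under a camera's RGB sensitivities, as in the dataset construction described above, is commonly modelled by summing reflectance × illuminant × channel sensitivity over wavelength. The sketch below shows that discrete image-formation model, assuming all spectra share one wavelength sampling; `render_rgb` and the toy curves are hypothetical and not taken from the paper's dataset or code.

```python
from typing import Sequence, Tuple


def render_rgb(
    reflectance: Sequence[float],
    illuminant: Sequence[float],
    sensitivities: Sequence[Sequence[float]],
) -> Tuple[float, ...]:
    """Hypothetical helper: render one surface to camera RGB.

    Each channel response is the sum over wavelengths of R(l) * E(l) * S_c(l),
    where R is the surface reflectance, E the illuminant power and S_c the
    sensitivity of channel c. All spectra must use the same sampling.
    """
    n = len(reflectance)
    if len(illuminant) != n or any(len(s) != n for s in sensitivities):
        raise ValueError("all spectra must share the same wavelength sampling")
    return tuple(
        sum(r * e * s for r, e, s in zip(reflectance, illuminant, curve))
        for curve in sensitivities
    )


# Toy 4-sample spectra (made-up values): one surface under one illuminant,
# rendered through two different sets of camera sensitivities.
surface = [0.2, 0.4, 0.6, 0.8]
light = [1.0, 1.0, 1.0, 1.0]
camera_a = [[0.0, 0.1, 0.3, 0.9], [0.1, 0.8, 0.6, 0.1], [0.9, 0.4, 0.0, 0.0]]
camera_b = [[0.0, 0.0, 0.5, 0.8], [0.2, 0.7, 0.7, 0.0], [0.8, 0.5, 0.1, 0.0]]
print(render_rgb(surface, light, camera_a))
print(render_rgb(surface, light, camera_b))
```

The two calls return different RGB triplets for the same physical scene, which illustrates why rendering one spectral dataset through several sensitivity sets yields data for several simulated cameras.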

- Object Color Relighting with Progressively Decreasing Information. Luca Cogo, Marco Buzzelli, Simone Bianco, and Raimondo Schettini. In Journal of Physics: Conference Series, 2025.

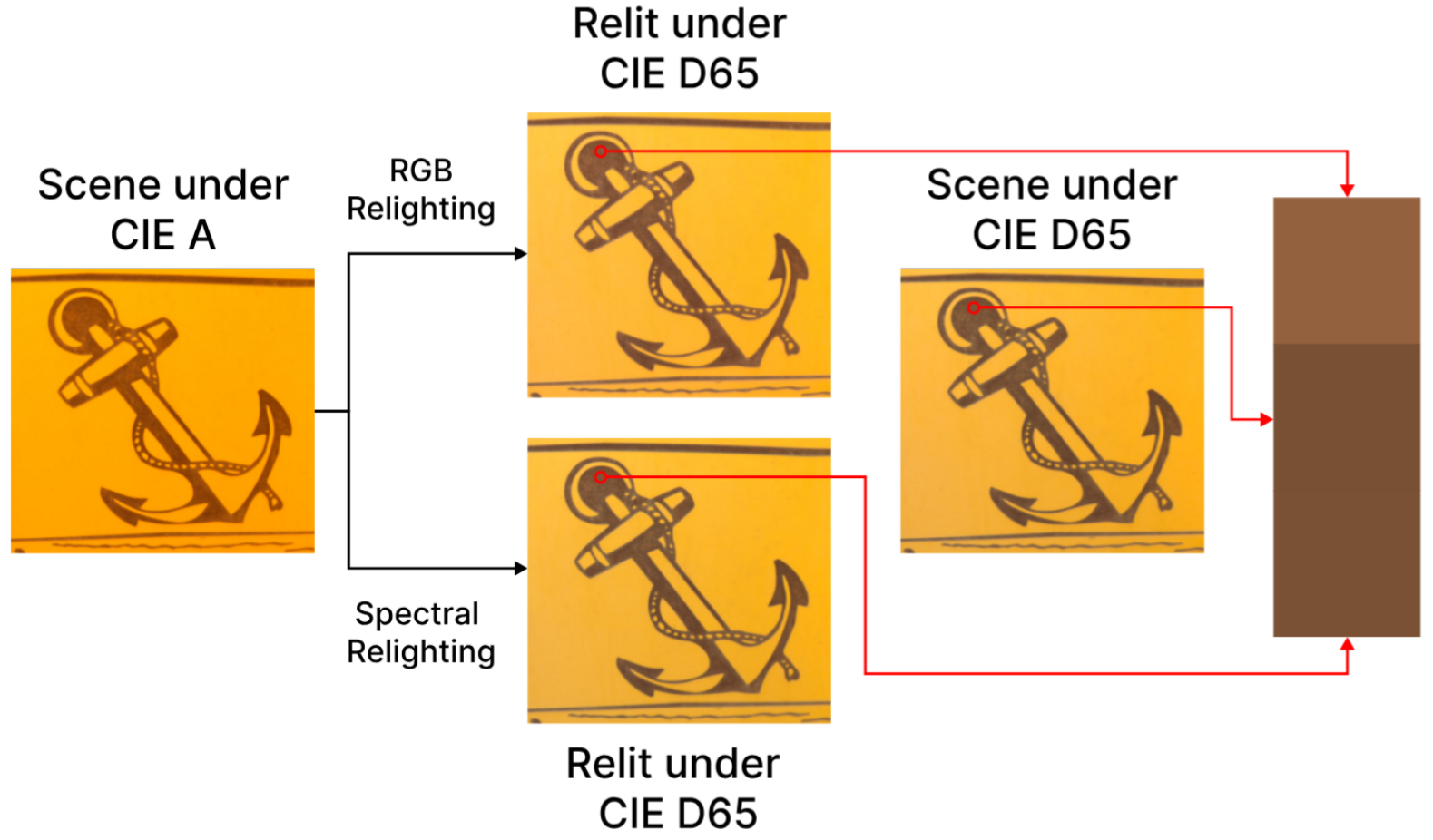

Object color relighting, the process of predicting an object’s colorimetric values under new lighting conditions, is a significant challenge in computational imaging and graphics, with important applications in augmented reality, digital heritage, and e-commerce. In this paper, we address object color relighting under progressively decreasing information settings, ranging from full spectral knowledge to tristimulus-only input. Our framework systematically compares physics-based rendering, spectral reconstruction, and colorimetric mapping techniques across varying data regimes. Experiments span five benchmark reflectance datasets and eleven standard illuminants, with relighting accuracy assessed via the ΔE00 (CIEDE2000) color-difference metric. Results indicate that third-order polynomial regression performs well when trained on small datasets, while neural spectral reconstruction achieves superior performance with large-scale training. Spectral methods also exhibit higher robustness to illuminant variability, underscoring the value of intermediate spectral estimation in practical relighting scenarios.
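The colorimetric-mapping baseline mentioned above can be illustrated with a third-order polynomial regression. This is a minimal sketch, assuming the mapping takes a tristimulus triplet under a source illuminant to a triplet under a target illuminant and uses all 20 monomials of total degree at most 3 (including a constant term), fitted by ordinary least squares. The paper's exact term set, color space and solver are not stated here, so `poly3_terms`, `fit_poly3` and `apply_poly3` are hypothetical names and choices.

```python
from itertools import combinations_with_replacement
from typing import List, Sequence, Tuple

Triplet = Tuple[float, float, float]


def poly3_terms(x: Sequence[float]) -> List[float]:
    """All monomials of total degree <= 3 in three variables: 1 + 3 + 6 + 10 = 20 terms."""
    terms = [1.0]
    for degree in (1, 2, 3):
        for idx in combinations_with_replacement(range(3), degree):
            prod = 1.0
            for i in idx:
                prod *= x[i]
            terms.append(prod)
    return terms


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the square system a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system: use more, better-spread training samples")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def fit_poly3(src: Sequence[Triplet], dst: Sequence[Triplet]) -> List[List[float]]:
    """Least-squares fit (normal equations) of one coefficient vector per output channel."""
    rows = [poly3_terms(s) for s in src]
    k = len(poly3_terms((0.0, 0.0, 0.0)))
    if len(rows) < k:
        raise ValueError(f"need at least {k} training pairs, got {len(rows)}")
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    coeffs = []
    for ch in range(3):
        atb = [sum(r[i] * d[ch] for r, d in zip(rows, dst)) for i in range(k)]
        coeffs.append(_solve(ata, atb))
    return coeffs


def apply_poly3(coeffs: List[List[float]], x: Sequence[float]) -> Triplet:
    """Map one tristimulus triplet with the fitted polynomial."""
    t = poly3_terms(x)
    r, g, b = (sum(w * v for w, v in zip(ch, t)) for ch in coeffs)
    return (r, g, b)
```

Each output channel has only 20 coefficients, so a few dozen well-spread training pairs are enough to determine the model; this small parameter count is one plausible reason such a regression remains competitive when training data are scarce.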

```bibtex
@inproceedings{cogo2025object,
  title = {Object Color Relighting with Progressively Decreasing Information},
  booktitle = {Journal of Physics: Conference Series},
  volume = {3128},
  number = {1},
  pages = {012007},
  year = {2025},
  doi = {10.1088/1742-6596/3128/1/012007},
  url = {https://iopscience.iop.org/article/10.1088/1742-6596/3128/1/012007/meta},
  author = {Cogo, Luca and Buzzelli, Marco and Bianco, Simone and Schettini, Raimondo},
  keywords = {Spectral Imaging, Color Relighting},
}
```

- On the fair use of the ColorChecker dataset for illuminant estimation. Marco Buzzelli, Graham Finlayson, Arjan Gijsenij, Peter Gehler, Mark Drew, Lilong Shi, Luca Cogo, and Simone Bianco. In Journal of Physics: Conference Series, 2025.

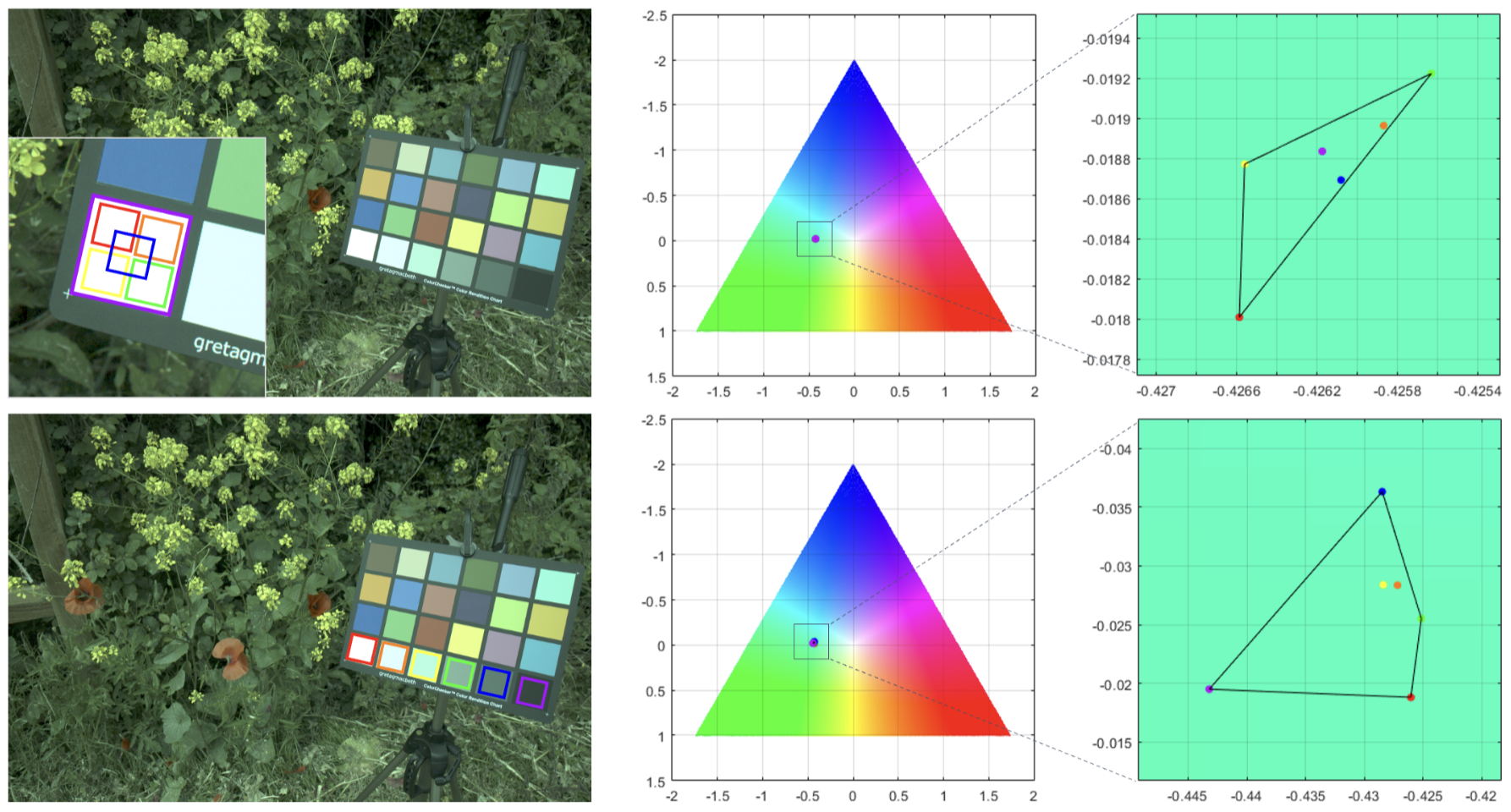

The ColorChecker dataset is the most widely used dataset for evaluating and benchmarking illuminant-estimation algorithms. Although it is distributed with a 3-fold cross-validation partitioning, no procedure has been defined for how to use it. To permit a fair comparison between illuminant-estimation algorithms, in this short correspondence we define a fair comparison procedure and show that the illuminant-estimation errors of state-of-the-art algorithms have been underestimated by up to 33%. We also compute the lower error bounds that can be reached on this dataset, which demonstrate that existing algorithms have not yet reached their maximum performance potential.
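Illuminant-estimation errors are conventionally reported as the recovery angular error between the estimated and ground-truth illuminant RGB. The sketch below pairs that metric with a generic 3-fold protocol in which each image is scored only by a model trained on the other two folds and statistics are computed over the pooled errors. It is an illustration under those assumptions, not necessarily the exact procedure defined in the paper; `train_fn` and `predict_fn` are hypothetical placeholders for any learning-based estimator.

```python
import math
from statistics import mean, median
from typing import Any, Callable, Dict, List, Sequence, Tuple

RGB = Tuple[float, float, float]
Sample = Tuple[Any, RGB]  # (image, ground-truth illuminant RGB)


def angular_error_deg(est: Sequence[float], gt: Sequence[float]) -> float:
    """Recovery angular error (degrees) between estimated and true illuminant RGB."""
    dot = sum(e * g for e, g in zip(est, gt))
    norm = math.sqrt(sum(e * e for e in est)) * math.sqrt(sum(g * g for g in gt))
    cos = max(-1.0, min(1.0, dot / norm))  # clamp rounding noise before acos
    return math.degrees(math.acos(cos))


def pooled_cross_validation(
    folds: Sequence[Sequence[Sample]],
    train_fn: Callable[[List[Sample]], Any],
    predict_fn: Callable[[Any, Any], RGB],
) -> Dict[str, float]:
    """Score every image exactly once, with a model trained only on the other
    folds, then compute statistics over the pooled errors of all folds."""
    errors: List[float] = []
    for k, test_fold in enumerate(folds):
        train = [s for j, fold in enumerate(folds) if j != k for s in fold]
        model = train_fn(train)
        errors.extend(angular_error_deg(predict_fn(model, img), gt) for img, gt in test_fold)
    return {"mean": mean(errors), "median": median(errors), "max": max(errors)}
```

Pooling is a deliberate choice: the median of all pooled errors generally differs from the average of per-fold medians, so two papers that aggregate differently can report different numbers for the same algorithm.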

```bibtex
@inproceedings{buzzelli2025fair,
  title = {On the fair use of the ColorChecker dataset for illuminant estimation},
  booktitle = {Journal of Physics: Conference Series},
  volume = {3128},
  number = {1},
  pages = {012014},
  year = {2025},
  doi = {10.1088/1742-6596/3128/1/012014},
  url = {https://iopscience.iop.org/article/10.1088/1742-6596/3128/1/012014/meta},
  author = {Buzzelli, Marco and Finlayson, Graham and Gijsenij, Arjan and Gehler, Peter and Drew, Mark and Shi, Lilong and Cogo, Luca and Bianco, Simone},
  keywords = {Illuminant Estimation, Computational Color constancy},
}
```

- Efficient Single Image Super-Resolution for Images with Spatially Varying Degradations. Simone Bianco, Luca Cogo, Gianmarco Corti, and Raimondo Schettini. In International Conference on Image Analysis and Processing, 2025.

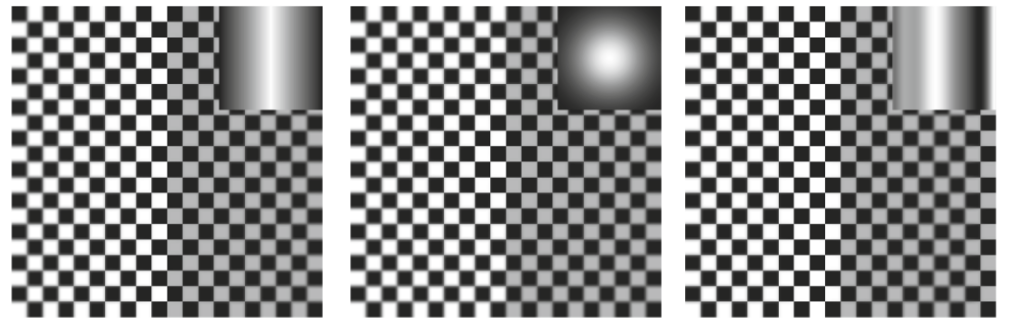

Single Image Super-Resolution (SISR) is a computer vision task that aims to generate high-resolution images from their low-resolution counterparts. Typically, super-resolution methods use scaling factors of ×2, ×3, or ×4 to uniformly enhance the resolution of the entire image. However, some acquisition devices, such as 360° cameras, produce images with nonuniform resolution across the frame. In this work, we propose to adapt state-of-the-art efficient SISR methods to restore images affected by spatially varying degradations. Specifically, we focus on the method that won the recent NTIRE 2024 Efficient Super-Resolution Challenge. For our experiments, we generate synthetic images with different types of spatially varying degradation, and we modify and train the SISR method to handle such challenging scenarios effectively. In addition to evaluating the developed method with traditional image quality metrics such as PSNR and SSIM, we also assess its practical impact on a downstream object detection task. Results on the WIDER FACE face-detection dataset, using the YOLOv8 object detection model, show that applying the proposed SISR approach to images with spatially varying degradations produces artifact-free outputs and enables the object detector to perform better than when it is applied directly to the degraded images.
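The abstract trains on synthetic spatially varying degradations and reports PSNR. The sketch below illustrates both ideas on a grayscale image stored as nested lists. The degradation is a hypothetical stand-in, not the paper's degradation model: a horizontal box blur whose radius grows from 0 at the centre column to `max_radius` at the borders, loosely mimicking resolution that falls off across a 360° frame. PSNR assumes 8-bit data (peak 255).

```python
import math
from typing import List

Image = List[List[float]]  # grayscale, row-major


def varying_blur(img: Image, max_radius: int) -> Image:
    """Hypothetical degradation: horizontal box blur whose radius grows linearly
    from 0 at the centre column to max_radius at the left/right borders."""
    w = len(img[0])
    cx = (w - 1) / 2
    out: Image = []
    for row in img:
        new_row = []
        for x in range(w):
            r = round(max_radius * abs(x - cx) / cx) if cx > 0 else 0
            window = row[max(0, x - r): min(w, x + r + 1)]
            new_row.append(sum(window) / len(window))
        out.append(new_row)
    return out


def psnr(ref: Image, test: Image, peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    diffs = [(a - b) ** 2 for rr, tr in zip(ref, test) for a, b in zip(rr, tr)]
    mse = sum(diffs) / len(diffs)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


# Toy 1x5 "image" with a bright centre column: the centre stays sharp,
# while border pixels are averaged over wider windows.
img = [[0.0, 0.0, 255.0, 0.0, 0.0]]
degraded = varying_blur(img, max_radius=2)
print(degraded, psnr(img, degraded))
```

A restoration model trained on pairs like `(degraded, img)` sees a different amount of blur at every column, which is the property that uniform ×2/×3/×4 training data lacks.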

```bibtex
@inproceedings{bianco2025efficient,
  title = {Efficient Single Image Super-Resolution for Images with Spatially Varying Degradations},
  author = {Bianco, Simone and Cogo, Luca and Corti, Gianmarco and Schettini, Raimondo},
  booktitle = {International Conference on Image Analysis and Processing},
  pages = {234--246},
  year = {2025},
  organization = {Springer Nature},
}
```

- Robust camera-independent color chart localization using YOLO. Luca Cogo, Marco Buzzelli, Simone Bianco, and Raimondo Schettini. Pattern Recognition Letters, 2025.

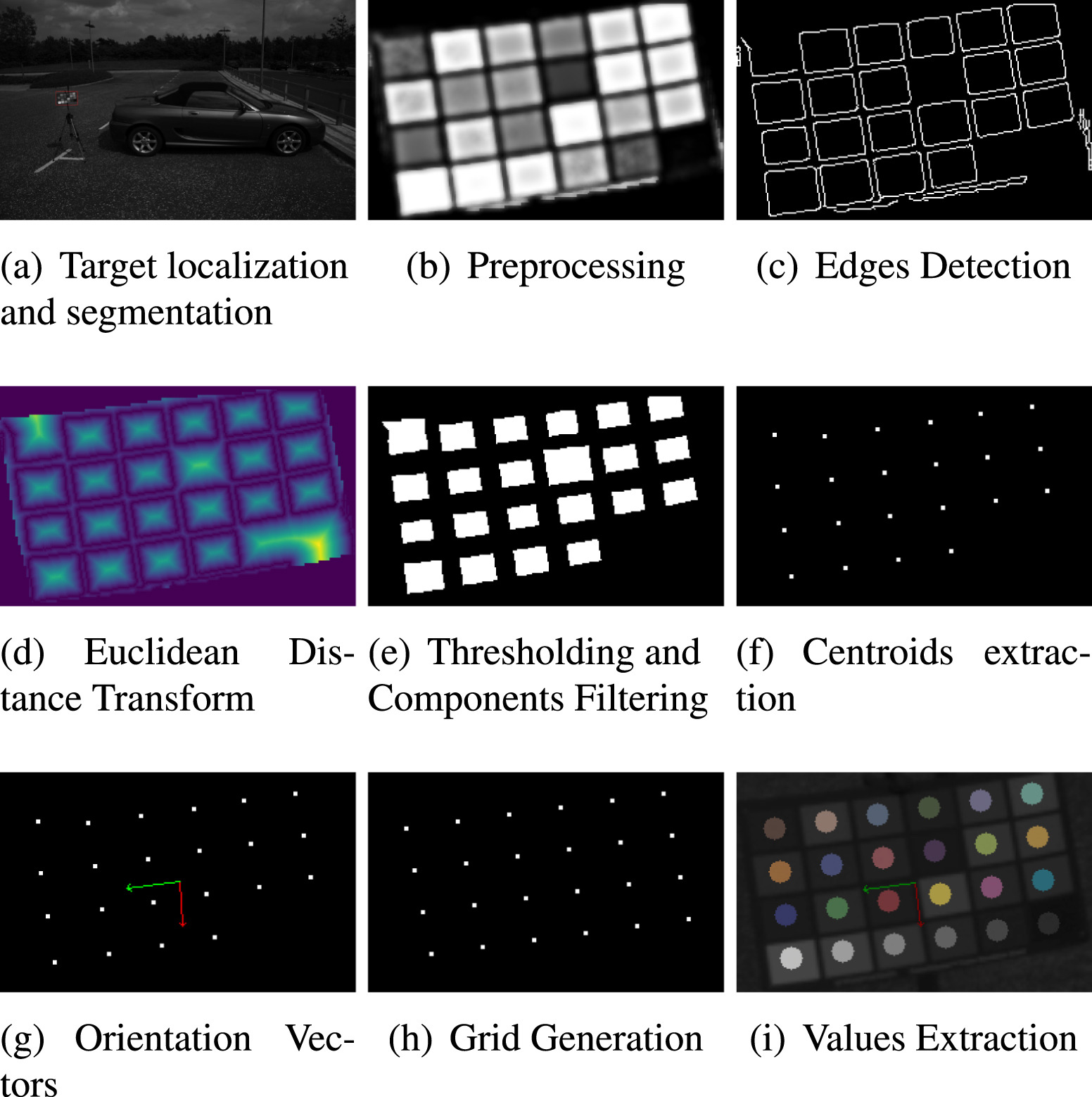

Accurate color information plays a critical role in numerous computer vision tasks, and the Macbeth ColorChecker is a widely used reference target because its color patches are colorimetrically characterized. However, automating the precise extraction of color information in complex scenes remains a challenge. In this paper, we propose a novel method for the automatic detection of Macbeth ColorCheckers and the accurate extraction of their color information in challenging environments. Our approach involves two distinct phases: (i) a chart localization step that uses a deep learning model to identify the presence of the ColorChecker, and (ii) a consensus-based pose estimation and color extraction phase that ensures precise localization and description of individual color patches. We rigorously evaluate our method on the widely adopted NUS and ColorChecker datasets. Comparisons with state-of-the-art methods show that our method outperforms the best existing solution, with improvements of about 5% on the ColorChecker dataset and about 17% on the NUS dataset. Furthermore, the design of our approach enables it to handle multiple ColorCheckers in complex scenes. Code will be made available after publication at: https://github.com/LucaCogo/ColorChartLocalization.
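Once the four outer corners of a ColorChecker are known, the 24 patch centres (4 rows × 6 columns) can be located by mapping a regular grid through a homography and averaging the pixels around each centre. The sketch below covers only that step, assuming corners ordered top-left, top-right, bottom-right, bottom-left and uniformly spaced patch centres, which ignores the real chart's outer border and inter-patch gaps. It is not the consensus-based pose estimation described in the paper, and all function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
RGB = Tuple[float, float, float]


def square_to_quad(quad: Sequence[Point]) -> Callable[[float, float], Point]:
    """Projective map from the unit square to a quadrilateral.

    Corners (0,0), (1,0), (1,1), (0,1) map to quad[0..3], assumed to be the chart's
    top-left, top-right, bottom-right and bottom-left corners in image coordinates.
    """
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad
    dx1, dy1 = x1 - x2, y1 - y2
    dx2, dy2 = x3 - x2, y3 - y2
    dx3, dy3 = x0 - x1 + x2 - x3, y0 - y1 + y2 - y3
    den = dx1 * dy2 - dx2 * dy1
    if den == 0:
        raise ValueError("degenerate quadrilateral")
    g = (dx3 * dy2 - dx2 * dy3) / den  # g = h = 0 for a parallelogram (affine case)
    h = (dx1 * dy3 - dx3 * dy1) / den
    a, b, c = x1 - x0 + g * x1, x3 - x0 + h * x3, x0
    d, e, f = y1 - y0 + g * y1, y3 - y0 + h * y3, y0

    def warp(u: float, v: float) -> Point:
        w = g * u + h * v + 1.0
        return ((a * u + b * v + c) / w, (d * u + e * v + f) / w)

    return warp


def patch_centres(quad: Sequence[Point], rows: int = 4, cols: int = 6) -> List[Point]:
    """Row-major centres of a rows x cols grid of equally sized cells inside the quad."""
    warp = square_to_quad(quad)
    return [warp((col + 0.5) / cols, (row + 0.5) / rows)
            for row in range(rows) for col in range(cols)]


def sample_patch(img: List[List[RGB]], centre: Point, half: int = 2) -> RGB:
    """Mean RGB in a (2*half+1)^2 window around a patch centre, clipped to the image."""
    h, w = len(img), len(img[0])
    cx, cy = round(centre[0]), round(centre[1])
    pixels = [img[y][x]
              for y in range(max(0, cy - half), min(h, cy + half + 1))
              for x in range(max(0, cx - half), min(w, cx + half + 1))]
    if not pixels:
        raise ValueError("patch centre lies outside the image")
    r, g, b = (sum(p[i] for p in pixels) / len(pixels) for i in range(3))
    return (r, g, b)
```

A real extractor would replace the uniform grid with the chart's measured patch layout and keep the sampling window well inside each patch to avoid edge pixels.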

```bibtex
@article{cogo2025robust,
  title = {Robust camera-independent color chart localization using YOLO},
  journal = {Pattern Recognition Letters},
  volume = {192},
  pages = {51--58},
  year = {2025},
  issn = {0167-8655},
  doi = {10.1016/j.patrec.2025.03.022},
  url = {https://www.sciencedirect.com/science/article/pii/S0167865525001138},
  author = {Cogo, Luca and Buzzelli, Marco and Bianco, Simone and Schettini, Raimondo},
  keywords = {Object detection, Color target, Pose estimation},
}
```

2024

- RGB illuminant compensation using multi-spectral information. Mirko Agarla, Simone Bianco, Marco Buzzelli, Luca Cogo, Ilaria Erba, Matteo Kolyszko, Raimondo Schettini, and Simone Zini. XIX Color Conference, 2024.

Multispectral imaging is a technique that captures data across several bands of the light spectrum. In this contribution, we report our research on its application to illuminant estimation and correction in the RGB domain. In particular, we present:

1. A method that exploits multispectral imaging for illuminant estimation and then applies illuminant correction in the raw RGB domain to achieve computational color constancy.
2. A method that combines illuminant estimation in the RGB color domain and in the spectral domain, as a strategy to provide a refined estimation in the RGB color domain.
3. A method that recovers, as accurately as possible, the spectral information of both the image and the illuminant using Spectral Super Resolution techniques, and exploits a weighted spectral compensation technique that optimizes the compensation of possible spectral … to perform effective color correction.
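The first method above ends with illuminant correction in the raw RGB domain. A widely used way to apply an illuminant estimate there is the diagonal (von Kries-style) model, sketched below under the assumptions of linear raw RGB values and an estimate given as a positive RGB triplet (for example, one derived from the multispectral sensor). `diagonal_correct` is a hypothetical name, not the authors' implementation.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def diagonal_correct(pixels: Sequence[RGB], illuminant: RGB) -> List[RGB]:
    """Von Kries-style correction in linear raw RGB.

    Each channel is scaled by green_illuminant / channel_illuminant, so a surface
    that reflects the illuminant unchanged maps to a neutral (R = G = B) value
    while the green channel keeps its original level. No clipping is applied.
    """
    r_e, g_e, b_e = illuminant
    if min(illuminant) <= 0:
        raise ValueError("illuminant components must be positive")
    gains = (g_e / r_e, 1.0, g_e / b_e)
    return [(r * gains[0], g * gains[1], b * gains[2]) for r, g, b in pixels]


# A pixel whose raw value equals the estimated illuminant becomes neutral gray.
estimate = (0.5, 0.4, 0.2)
print(diagonal_correct([(0.5, 0.4, 0.2), (0.1, 0.3, 0.05)], estimate))
```

Normalizing the gains to the green channel is a common convention that keeps overall exposure roughly unchanged; other normalizations (for example, unit-norm gains) would work equally well for color constancy.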